I am trying to fit a lognormal distribution using Scipy. I've already done it using Matlab before but because of the need to extend the application beyond statistical analysis, I am in the process of trying to reproduce the fitted values in Scipy.

Below is the Matlab code I used to fit my data:

% Read input data (one value per line)

x = [];

fid = fopen(file_path, 'r'); % reading is default action for fopen

disp('Reading network degree data...');

if fid == -1

disp('[ERROR] Unable to open data file.')

else

while ~feof(fid)

[x] = [x fscanf(fid, '%f', [1])];

end

c = fclose(fid);

if c == 0

disp('File closed successfully.');

else

disp('[ERROR] There was a problem with closing the file.');

end

end

[f,xx] = ecdf(x);

y = 1-f;

parmhat = lognfit(x); % MLE estimate

mu = parmhat(1);

sigma = parmhat(2);

And here's the fitted plot:

Now here's my Python code with the aim of achieving the same:

import math

from scipy import stats

from statsmodels.distributions.empirical_distribution import ECDF

# The same input is read as a list in Python

ecdf_func = ECDF(degrees)

x = ecdf_func.x

ccdf = 1-ecdf_func.y

# Fit data

shape, loc, scale = stats.lognorm.fit(degrees, floc=0)

# Parameters

sigma = shape # standard deviation

mu = math.log(scale) # meanlog of the distribution

fit_ccdf = stats.lognorm.sf(x, [sigma], floc=1, scale=scale)

Here's the fit using the Python code.

As you can see, both sets of code are capable of producing good fits, at least visually speaking.

Problem is that there is a huge difference in the estimated parameters mu and sigma.

From Matlab: mu = 1.62 sigma = 1.29. From Python: mu = 2.78 sigma = 1.74.

Why is there such a difference?

Note: I have double checked that both sets of data fitted are exactly the same. Same number of points, same distribution.

Your help is much appreciated! Thanks in advance.

Other info:

import scipy

import numpy

import statsmodels

scipy.__version__

'0.9.0'

numpy.__version__

'1.6.1'

statsmodels.__version__

'0.5.0.dev-1bbd4ca'

Version of Matlab is R2011b.

Edition:

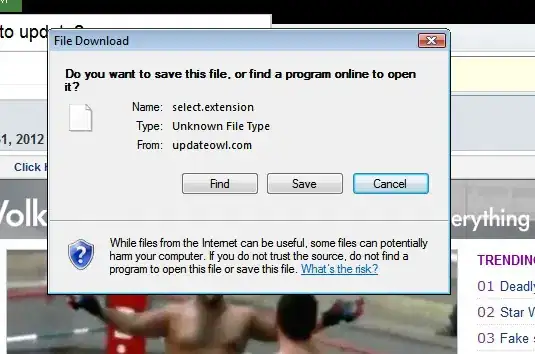

As demonstrated in the answer below, the fault lies with Scipy 0.9. I am able to reproduce the mu and sigma results from Matlab using Scipy 11.0.

An easy way to update your Scipy is:

pip install --upgrade Scipy

If you don't have pip (you should!):

sudo apt-get install pip