I need to map tuples of integers, of arbitrary but fixed length, onto RGB values. It would be especially nice if the colors were ordered more or less by magnitude, with any standard way of choosing the sub-ordering between tuples such as (0,1) and (1,0).

Here's how I'm doing this now:

I have a long list of RGB values of colors.

    colors = [(0,0,0),(255,255,255),...]

I take the hash of the tuple mod the number of colors, and use this as the index.

    def tuple_to_rgb(atuple):
        index = hash(atuple) % len(colors)
        return colors[index]

This works OK, but I'd like it to work more like a heatmap value, where (5,5,5) has a larger value than (0,0,0), so that adjacent colors make some sense, maybe getting "hotter" as the values get larger.
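To make the "hotter" idea concrete, here is a minimal sketch of one possible heat ramp (black, red, yellow, white). The name `heat_rgb` and the choice of ramp are assumptions for illustration only; any other gradient would serve equally well.

```python
def heat_rgb(fraction):
    """Map a fraction in [0, 1] to a black -> red -> yellow -> white ramp.

    Hypothetical helper: the ramp itself is an arbitrary choice.
    """
    # Clamp to [0, 1], then stretch over three segments (one per channel).
    x = min(max(fraction, 0.0), 1.0) * 3.0
    r = min(x, 1.0)                    # red fills first
    g = min(max(x - 1.0, 0.0), 1.0)    # then green (red + green = yellow)
    b = min(max(x - 2.0, 0.0), 1.0)    # then blue (all three = white)
    return tuple(int(round(255 * c)) for c in (r, g, b))
```

With a ramp like this, a tuple's position in the desired ordering, divided by the largest position expected, would give the fraction, so that (5,5,5) lands on a hotter color than (0,0,0).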

I know how to map integers onto RGB values, so perhaps if I just had a decent way of generating a unique integer from a tuple that sorted first by the magnitude of the tuple and then by the interior values it might work.
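One way such an integer could be computed without enumerating anything is a combinatorial rank. The sketch below is only a starting point and rests on two assumptions that are not stated above: the entries are non-negative integers, and "magnitude" means the sum of the entries. The names `count_with_sum` and `tuple_rank` are hypothetical, and `math.comb` requires Python 3.8 or later.

```python
from math import comb

def count_with_sum(length, total):
    """Number of non-negative integer tuples of this length with this sum."""
    if length == 0:
        return 1 if total == 0 else 0
    return comb(total + length - 1, length - 1)

def tuple_rank(t):
    """Unique integer for t: ordered by sum(t) first, then lexicographically.

    Assumes every entry of t is a non-negative integer.
    """
    n = len(t)
    s = sum(t)
    # All tuples of length n whose sum is strictly smaller than s come first.
    rank = comb(s + n - 1, n)
    remaining = s
    for i, value in enumerate(t):
        slots_left = n - i - 1
        # Count tuples with the same prefix but a smaller entry at position i.
        for smaller in range(value):
            rank += count_with_sum(slots_left, remaining - smaller)
        remaining -= value
    return rank
```

For length-2 tuples this gives (0,0), (0,1), (1,0), (0,2), (1,1), (2,0) the integers 0 through 5, so (0,1) sorts before (1,0) by plain lexicographic order.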

I could simply write my own sort comparator, generate the entire list of possible tuples in advance, and use the order in the list as the unique integer, but it would be much easier if I didn't have to generate all of the possible tuples in advance.
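For reference, the precomputed approach described above might look like the following sketch. It assumes each entry is bounded by a known maximum; `max_value` and `build_rank_table` are hypothetical names, and a key function on (sum, tuple) stands in for a hand-written comparator.

```python
from itertools import product

def build_rank_table(length, max_value):
    """Enumerate every tuple with entries in 0..max_value and rank them.

    Tuples are ordered by their sum first, then lexicographically.
    The table has (max_value + 1) ** length entries, which is the cost
    the question hopes to avoid.
    """
    ordered = sorted(
        product(range(max_value + 1), repeat=length),
        key=lambda t: (sum(t), t),
    )
    return {t: i for i, t in enumerate(ordered)}
```

A lookup such as `build_rank_table(2, 1)[(1, 0)]` then returns the position of (1,0) in the sorted list, which could be fed to whatever integer-to-RGB mapping is already in use.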

Does anyone have any suggestions? This seems like something do-able, and I'd appreciate any hints to push me in the right direction.

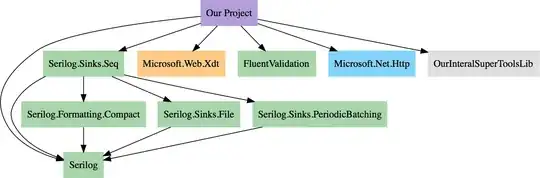

For those who are interested, I'm trying to visualize predictions of electron occupations of quantum dots, like those in Fig 1b of this paper, but with an arbitrary number of dots (and thus an arbitrary tuple length). The tuple length is fixed in a given application of the code, but I don't want the code to be specific to double-dots or triple-dots. It probably won't get much bigger than quadruple dots, but experimentalists dream up some pretty wild systems.