I'm working on a project that has thousands of .cpp files plus thousands more .h and .hpp and the build takes 28min running from an SSD.

We inherited this project from a different company just weeks ago but perusing the makefiles, they explicitly disabled parallel builds via the .NOPARALLEL phony target; we're trying to find out if they have a good reason.

Worst case, the only way to speed this up is to use a RAM drive.

So I followed the instructions from Tekrevue and installed Imdisk and then ran benchmarks using CrystalDiskMark:

I also ran dd using Cygwin and there's a significant speedup (at least 3x) on the RAM drive compared to my SSD.

However, my build time changes not one minute!

So then I thought: maybe my proprietary compiler calls some Windows API and causes a huge slowdown so I built fftw from source on Cygwin.

What I expected is that my processor usage would increase to some max and stay there for the duration of the build. Instead, my usage was very spiky: one for each file compiled. I understand even Cygwin still has to interact with windows so the fact that I still got spiky proc usage makes me assume that it's not my compiler that's the issue.

Ok. New theory: invoking compiler for each source-file has some huge overhead in Windows so, I copy-pasted from my build-log and passed 45 files to my compiler and compared it to invoking the compiler 45 times separately. Invoking ONCE was faster but only by 4 secs total for the 45 files. And I saw the same "spiky" processor usage as when invoking compiler once for each file.

Why can't I get the compiler to run faster even when running from RAM drive? What's the overhead?

UPDATE #1 Commenters have been saying, I think, that the RAM drive thing is kind of unnecessary bc windows will cache the input and output files in RAM anyway. Plus, maybe the RAM drive implementation (ie drivers) is sub-optimal. So, I'm not using the RAM drive anymore.

Also, people have said that I should run the 45-file build multiple times so as to remove the overhead for caching: I ran it 4 times and each time it was 52secs.

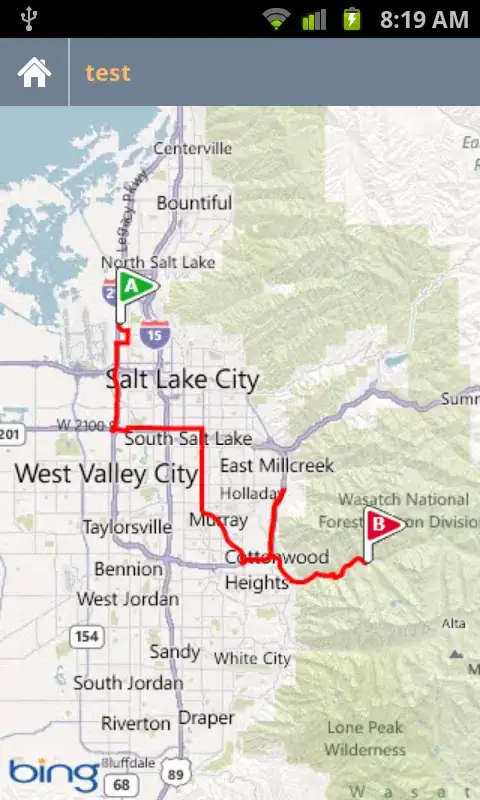

CPU usage (taken 5 secs before compilation ended)

Virtual memory usage

When the compiler spits out stuff to disk, it's actually cached in RAM first, right?

Well then this screenshot indicates that IO is not an issue or rather, it's as fast as my RAM.

When the compiler spits out stuff to disk, it's actually cached in RAM first, right?

Well then this screenshot indicates that IO is not an issue or rather, it's as fast as my RAM.

Question: So since everything is in RAM, why isn't the CPU % higher more of the time? Is there anything I can do to make single- threaded/job build go faster? (Remember this is single-threaded build for now)

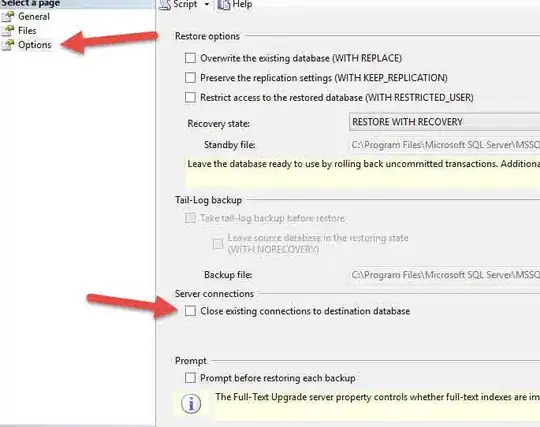

UPDATE 2 It was suggested below that I should set the affinity, of my compile-45-files invocation, to 1 so that windows won't bounce around the invocation to multiple cores. The result:

100% single-core usage! for the same 52secs

So it wasn't the hard drive, RAM or the cache but CPU that's the bottleneck.

**THANK YOU ALL! ** for your help

========================================================================

My machine: Intel i7-4710MQ @ 2.5GHz, 16GB RAM