I'm very new to TensorFlow, and I'm trying to train a model based on the Inception v3 network for use in an iPhone app. I managed to export my graph as a protocol buffer file, manually removed the dropout nodes (correctly, I hope), and placed that .pb file in my iOS project, but now I am receiving the following error:

Running model failed:Not found: FeedInputs: unable to find feed output input

which seems to indicate that my input_layer_name and output_layer_name variables in the iOS app are misconfigured.

I've seen in various places that, for Inception v3, these should be Mul and softmax respectively, but those values don't work for me.

My question is: what is a layer (in this context), and how do I find out what the names of mine are?

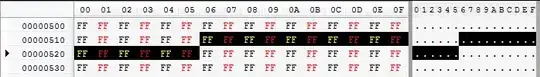

This is the exact definition of the model that I trained, but I don't see "Mul" or "softmax" present.

This is what I've been able to learn about layers, but it seems to be a different concept, since "Mul" isn't present in that list.

I'm worried that this might be a duplicate of this question, but "layers" aren't explained there (are they tensors?), and graph.get_operations() seems to be deprecated, or maybe I'm using it wrong.
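
For reference, here is a minimal sketch of how the node names in an exported .pb file might be listed, on the assumption that the "layer names" the iOS code expects are really names of nodes (operations) in the graph. The helper names `load_graph_def`, `candidate_endpoints`, and `print_nodes` are made up for illustration, and the Placeholder / "not consumed by any other node" heuristics are only a starting point, not a definitive way to identify the right input and output.

```python
def load_graph_def(pb_path):
    """Parse a binary GraphDef (.pb) file from disk."""
    # TensorFlow is imported lazily so candidate_endpoints() works without it.
    import tensorflow as tf

    graph_def = tf.compat.v1.GraphDef()
    with tf.io.gfile.GFile(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    return graph_def


def candidate_endpoints(nodes):
    """Guess input and output node names from GraphDef-style nodes.

    Each node is expected to have .name, .op and .input attributes,
    as the entries of graph_def.node do.
    """
    nodes = list(nodes)
    consumed = set()
    for node in nodes:
        for ref in node.input:
            # Inputs look like "name", "name:1", or "^name" (control dependency).
            consumed.add(ref.lstrip("^").split(":")[0])

    # Heuristic (assumption): inputs are Placeholder ops, and outputs are
    # nodes whose result is not consumed by any other node.
    inputs = [n.name for n in nodes if n.op == "Placeholder"]
    outputs = [n.name for n in nodes if n.name not in consumed]
    return inputs, outputs


def print_nodes(pb_path):
    """Print every node's op type and name, then the guessed endpoints."""
    graph_def = load_graph_def(pb_path)
    for node in graph_def.node:
        print(node.op, node.name)
    print(candidate_endpoints(graph_def.node))
```

Calling `print_nodes("my_model.pb")` (a placeholder path) would print every node in the file. Presumably the values for input_layer_name and output_layer_name have to match names that appear in that listing exactly, including any scope prefix.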