Apologies if this seems trivial - relatively new to openCV.

Essentially, I'm trying to create a function that can take in a camera's image, the known world coordinates of that image, and the world coordinates of some other point 2, and then transform the camera's image to what it would look like if the camera was at point 2. From my understanding, the best way to tackle this is using a homography transformation using the warpPerspective tool.

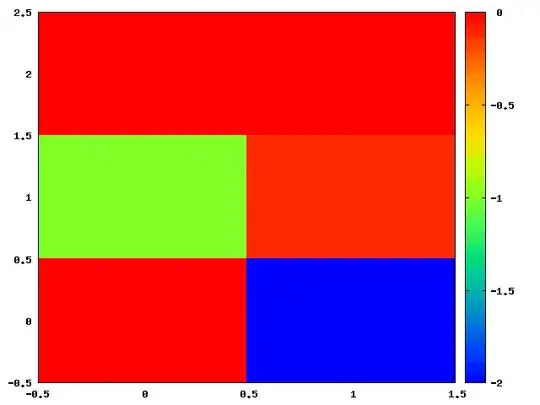

The experiment is being done inside the Unreal Game simulation engine. Right now, I essentially read the data from the camera, and add a set transformation to the image. However, I seem to be doing something wrong as the image is looking something like this (original image first then distorted image):

This is the current code I have. Basically, it reads in the texture from Unreal engine, and then gets the individual pixel values and puts them into the openCV Mat. Then I try and apply my warpPerspective transformation. Interestingly, if I just try a simple warpAffine transformation (rotation), it works fine. I have seen this questions: Opencv virtually camera rotating/translating for bird's eye view, but I cannot figure out what I am doing wrong vs. their solution. I would really appreciate any help or guidance any of you may have. Thanks in advance!

ROSCamTextureRenderTargetRes->ReadPixels(ImageData);

cv::Mat image_data_matrix(TexHeight, TexWidth, CV_8UC3);

cv::Mat warp_dst, warp_rotate_dst;

int currCol = 0;

int currRow = 0;

cv::Vec3b* pixel_left = image_data_matrix.ptr<cv::Vec3b>(currRow);

for (auto color : ImageData)

{

pixel_left[currCol][2] = color.R;

pixel_left[currCol][1] = color.G;

pixel_left[currCol][0] = color.B;

currCol++;

if (currCol == TexWidth)

{

currRow++;

currCol = 0;

pixel_left = image_data_matrix.ptr<cv::Vec3b>(currRow);

}

}

warp_dst = cv::Mat(image_data_matrix.rows, image_data_matrix.cols, image_data_matrix.type());

double rotX = (45 - 90)*PI / 180;

double rotY = (90 - 90)*PI / 180;

double rotZ = (90 - 90)*PI / 180;

cv::Mat A1 = (cv::Mat_<float>(4, 3) <<

1, 0, (-1)*TexWidth / 2,

0, 1, (-1)*TexHeight / 2,

0, 0, 0,

0, 0, 1);

// Rotation matrices Rx, Ry, Rz

cv::Mat RX = (cv::Mat_<float>(4, 4) <<

1, 0, 0, 0,

0, cos(rotX), (-1)*sin(rotX), 0,

0, sin(rotX), cos(rotX), 0,

0, 0, 0, 1);

cv::Mat RY = (cv::Mat_<float>(4, 4) <<

cos(rotY), 0, (-1)*sin(rotY), 0,

0, 1, 0, 0,

sin(rotY), 0, cos(rotY), 0,

0, 0, 0, 1);

cv::Mat RZ = (cv::Mat_<float>(4, 4) <<

cos(rotZ), (-1)*sin(rotZ), 0, 0,

sin(rotZ), cos(rotZ), 0, 0,

0, 0, 1, 0,

0, 0, 0, 1);

// R - rotation matrix

cv::Mat R = RX * RY * RZ;

// T - translation matrix

cv::Mat T = (cv::Mat_<float>(4, 4) <<

1, 0, 0, 0,

0, 1, 0, 0,

0, 0, 1, dist,

0, 0, 0, 1);

// K - intrinsic matrix

cv::Mat K = (cv::Mat_<float>(3, 4) <<

12.5, 0, TexHeight / 2, 0,

0, 12.5, TexWidth / 2, 0,

0, 0, 1, 0

);

cv::Mat warp_mat = K * (T * (R * A1));

//warp_mat = cv::getRotationMatrix2D(srcTri[0], 43.0, 1);

//cv::warpAffine(image_data_matrix, warp_dst, warp_mat, warp_dst.size());

cv::warpPerspective(image_data_matrix, warp_dst, warp_mat, image_data_matrix.size(), CV_INTER_CUBIC | CV_WARP_INVERSE_MAP);

cv::imshow("distort", warp_dst);

cv::imshow("imaage", image_data_matrix)