I'm using the following regularized cost() and gradient() functions:

    import numpy as np

    # sigmoid() is defined elsewhere in my code

    def cost(theta, x, y, lam):
        theta = theta.reshape(1, len(theta))
        predictions = sigmoid(np.dot(x, np.transpose(theta))).reshape(len(x), 1)
        # L2 penalty over every weight except the intercept (column 0)
        regularization = (lam / (len(x) * 2)) * np.sum(np.square(np.delete(theta, 0, 1)))
        complete = -1 * np.dot(np.transpose(y), np.log(predictions)) \
            - np.dot(np.transpose(1 - y), np.log(1 - predictions))
        return np.sum(complete) / len(x) + regularization

    def gradient(theta, x, y, lam):
        theta = theta.reshape(1, len(theta))
        predictions = sigmoid(np.dot(x, np.transpose(theta))).reshape(len(x), 1)
        # Copy of theta with the intercept zeroed so it is not penalized
        theta_without_intercept = theta.copy()
        theta_without_intercept[0, 0] = 0
        assert(theta_without_intercept.shape == theta.shape)
        # Penalty term: sums the non-intercept weights into a single scalar
        regularization = (lam / len(x)) * np.sum(theta_without_intercept)
        return np.sum(np.multiply((predictions - y), x), 0) / len(x) + regularization

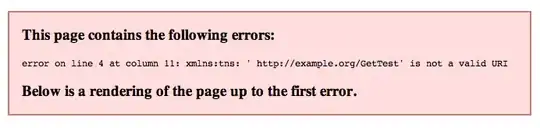

With these functions and scipy.optimize.fmin_bfgs() I get the following output (which is almost correct):

    Starting loss value: 0.69314718056
    Warning: Desired error not necessarily achieved due to precision loss.
    Current function value: 0.208444
    Iterations: 8
    Function evaluations: 51
    Gradient evaluations: 39
    7.53668131651e-08
    Trained loss value: 0.208443907192
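
For context, a call that produces output of this shape might look like the sketch below. Everything in it is an assumption for illustration: the synthetic `x` and `y`, the value `lam = 1.0`, and the helper names `stable_cost` and `stable_gradient`. It is not the original training script. It writes the log-loss with `np.logaddexp` so that `log(0)` cannot occur, and it applies the penalty gradient element-wise.

```python
import numpy as np
from scipy.optimize import fmin_bfgs
from scipy.special import expit  # numerically stable sigmoid

def stable_cost(theta, x, y, lam):
    """Regularized log-loss; theta and y are 1-D arrays (hypothetical helper)."""
    m = len(x)
    z = x @ theta
    # log(1 + exp(z)) - y*z equals the usual cross-entropy term, without log(0)
    data_term = np.mean(np.logaddexp(0.0, z) - y * z)
    return data_term + lam / (2 * m) * np.sum(theta[1:] ** 2)

def stable_gradient(theta, x, y, lam):
    """Gradient of stable_cost; the intercept theta[0] is not penalized."""
    m = len(x)
    penalty = (lam / m) * theta  # new array, theta itself is not modified
    penalty[0] = 0.0
    return x.T @ (expit(x @ theta) - y) / m + penalty

# Synthetic stand-in data (assumption): intercept column plus two features
rng = np.random.default_rng(0)
m = 60
x = np.hstack([np.ones((m, 1)), rng.normal(size=(m, 2))])
y = (rng.random(m) < expit(x @ np.array([0.3, 1.5, -1.0]))).astype(float)
lam = 1.0

theta0 = np.zeros(x.shape[1])
start_loss = stable_cost(theta0, x, y, lam)
result = fmin_bfgs(stable_cost, theta0, fprime=stable_gradient,
                   args=(x, y, lam), full_output=True, disp=False)
theta_opt, trained_loss, warnflag = result[0], result[1], result[6]

print("Starting loss value:", start_loss)
print("Trained loss value:", trained_loss)
print("warnflag:", warnflag)  # 0 means the optimizer reported convergence
```

With `theta0` set to zeros the starting loss is ln 2, which matches the 0.693... value in the output above.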

The regularization formula is shown in the image above. If I comment out the regularization terms, scipy.optimize.fmin_bfgs() works well and returns the local optimum correctly.

Any ideas why?

UPDATE:

Following the comments, I updated the cost and gradient regularization (in the code above), but the warning still appears (new output above). SciPy's check_grad function returns 7.53668131651e-08.
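
A minimal sketch of how such a gradient check can be run, and of one thing it can miss. The data, `lam`, and the function names here are assumptions for illustration, and the post does not say at which `theta` the check was evaluated. `gradient_summed` mirrors the `np.sum(...)` penalty from the code above, while `gradient_elementwise` uses `(lam / m) * theta_j` per component. At `theta = 0` the penalty vanishes in both, so the check cannot tell them apart; at a non-zero point it can.

```python
import numpy as np
from scipy.optimize import check_grad

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(theta, x, y, lam):
    m = len(x)
    p = sigmoid(x @ theta)
    penalty = lam / (2 * m) * np.sum(theta[1:] ** 2)  # intercept excluded
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)) + penalty

def gradient_elementwise(theta, x, y, lam):
    m = len(x)
    reg = (lam / m) * theta  # one penalty entry per weight
    reg[0] = 0.0             # intercept is not penalized
    return x.T @ (sigmoid(x @ theta) - y) / m + reg

def gradient_summed(theta, x, y, lam):
    m = len(x)
    # Same scalar penalty added to every component, as in the posted code
    return x.T @ (sigmoid(x @ theta) - y) / m + (lam / m) * np.sum(theta[1:])

# Synthetic stand-in data (assumption)
rng = np.random.default_rng(0)
m = 50
x = np.hstack([np.ones((m, 1)), rng.normal(size=(m, 2))])
y = (rng.random(m) < 0.5).astype(float)
lam = 1.0

theta_zero = np.zeros(3)
theta_nonzero = np.array([0.5, -1.0, 2.0])

err_summed_zero = check_grad(cost, gradient_summed, theta_zero, x, y, lam)
err_summed_nonzero = check_grad(cost, gradient_summed, theta_nonzero, x, y, lam)
err_elementwise_nonzero = check_grad(cost, gradient_elementwise, theta_nonzero, x, y, lam)

print("summed penalty, theta = 0:       ", err_summed_zero)
print("summed penalty, theta != 0:      ", err_summed_nonzero)
print("element-wise penalty, theta != 0:", err_elementwise_nonzero)
```

Since fmin_bfgs evaluates the gradient at many non-zero points, checking at more than one `theta` gives a more representative picture than a single check at the starting point.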

UPDATE 2:

I'm using the Iris data set from the UCI Machine Learning Repository. Following the one-vs-all classification approach, I'm first training the classifier for Iris-setosa.
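
For readers unfamiliar with one-vs-all, the target vector for the Iris-setosa classifier can be built roughly as sketched here. The `species` array is a small hypothetical sample rather than the real file contents, and `one_vs_all_labels` is an illustrative helper name.

```python
import numpy as np

# Hypothetical sample of species labels as they appear in the UCI file
species = np.array(["Iris-setosa", "Iris-versicolor",
                    "Iris-virginica", "Iris-setosa"])

def one_vs_all_labels(labels, positive_class):
    """Return 1.0 where a label equals positive_class and 0.0 elsewhere."""
    return (labels == positive_class).astype(float)

y_setosa = one_vs_all_labels(species, "Iris-setosa")
print(y_setosa)
```

The same helper would be called once per class to train the remaining classifiers.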