One way to do it is with pandas' sum method:

    In [1]: import pandas as pd
       ...: d = {'col1': [1,2,3,4,5], 'col2': [['a'],['a','b','c'],['d'],['e'],['a','e','d']]}
       ...: df = pd.DataFrame(data=d)

    In [2]: df['col2'].sum()
    Out[2]: ['a', 'a', 'b', 'c', 'd', 'e', 'a', 'e', 'd']

However, itertools.chain.from_iterable is much faster:

    In [3]: import itertools
       ...: list(itertools.chain.from_iterable(df['col2']))
    Out[3]: ['a', 'a', 'b', 'c', 'd', 'e', 'a', 'e', 'd']

    In [4]: %timeit df['col2'].sum()
    92.7 µs ± 1.03 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

    In [5]: %timeit list(itertools.chain.from_iterable(df['col2']))
    20.4 µs ± 2.62 µs per loop (mean ± std. dev. of 7 runs, 100000 loops each)
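A rough sketch of why the difference shows up and grows with the number of rows. It assumes that summing a column of lists boils down to repeated `list + list`, which is my reading of the timings rather than something checked against pandas internals. The helper names are made up for illustration, and the code uses plain Python lists instead of a dataframe:

```python
import itertools


def flatten_with_repeated_add(rows):
    """Flatten with acc = acc + row and count how many elements get copied."""
    acc, copied = [], 0
    for row in rows:
        # `+` builds a brand-new list, so everything gathered so far is copied again
        copied += len(acc) + len(row)
        acc = acc + row
    return acc, copied


def flatten_with_chain(rows):
    """Flatten with itertools.chain.from_iterable; each element is visited once."""
    flat = list(itertools.chain.from_iterable(rows))
    return flat, len(flat)


rows = [['a'], ['a', 'b', 'c'], ['d'], ['e'], ['a', 'e', 'd']]
flat_add, copies_add = flatten_with_repeated_add(rows)
flat_chain, copies_chain = flatten_with_chain(rows)

print(flat_add == flat_chain)    # True
print(copies_add, copies_chain)  # 25 9
```

With repeated addition the number of copies grows roughly with the square of the number of rows, while chaining grows linearly.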

In my testing, itertools.chain.from_iterable can be up to 30x faster for larger dataframes (~1000 rows). Another option is

    import functools
    import operator

    functools.reduce(operator.iadd, df['col2'], [])

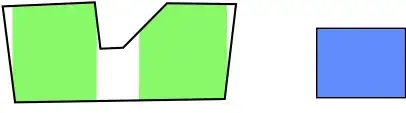

which is pretty much equally as fast as itertools.chain.from_iterable. I made a graph for all of the answers that were posted:

(The x-axis is the length of the dataframe)

As you can see, everything using sum or functools.reduce with operator.add scales badly as the dataframe grows, with np.concatenate being slightly better. However, the three winners by far are itertools.chain, itertools.chain.from_iterable, and functools.reduce with operator.iadd. They take almost no time by comparison. Here is the code used to produce the plot:

    import functools
    import itertools
    import operator
    import random
    import string

    import numpy as np
    import pandas as pd
    import perfplot  # see https://github.com/nschloe/perfplot for this awesome library


    def gen_data(n):
        return pd.DataFrame(data={0: [
            [random.choice(string.ascii_lowercase) for _ in range(random.randint(10, 20))]
            for _ in range(n)
        ]})


    def pd_sum(df):
        return df[0].sum()


    def np_sum(df):
        return np.sum(df[0].values)


    def np_concat(df):
        return np.concatenate(df[0]).tolist()


    def functools_reduce_add(df):
        return functools.reduce(operator.add, df[0].values)


    def functools_reduce_iadd(df):
        return functools.reduce(operator.iadd, df[0], [])


    def itertools_chain(df):
        return list(itertools.chain(*(df[0])))


    def itertools_chain_from_iterable(df):
        return list(itertools.chain.from_iterable(df[0]))


    perfplot.show(
        setup=gen_data,
        kernels=[
            pd_sum,
            np_sum,
            np_concat,
            functools_reduce_add,
            functools_reduce_iadd,
            itertools_chain,
            itertools_chain_from_iterable
        ],
        n_range=[10, 50, 100, 500, 1000, 1500, 2000, 2500, 3000, 4000, 5000],
        equality_check=None
    )
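One caveat about the functools.reduce with operator.iadd variant: the empty-list initial value matters. Without it, reduce uses the first row as the accumulator and operator.iadd extends that row in place, so the original data gets modified. A small sketch with plain lists standing in for the column (the variable names are only for illustration):

```python
import functools
import operator

# With an explicit [] as the initial value, the rows are left untouched.
rows = [['a'], ['b', 'c'], ['d']]
flat = functools.reduce(operator.iadd, rows, [])
print(flat)  # ['a', 'b', 'c', 'd']
print(rows)  # [['a'], ['b', 'c'], ['d']]

# Without it, the first row becomes the accumulator and is extended in place.
rows_no_init = [['a'], ['b', 'c'], ['d']]
flat_no_init = functools.reduce(operator.iadd, rows_no_init)
print(flat_no_init is rows_no_init[0])  # True
print(rows_no_init[0])                  # ['a', 'b', 'c', 'd']
```

So keep the `[]` argument if the dataframe is going to be used again afterwards.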