I am working on a multi-class classification problem, and after experimenting with several neural network architectures I settled on a stacked LSTM, as it yields the best accuracy for my use case. Unfortunately, the network takes a long time (almost 48 hours, ~1000 epochs) to reach a good accuracy, even with GPU acceleration. The resulting accuracy and loss curves are:

At this point, given the good performance but very slow training, I suspect a bug in my code. I tested it using the golden tests mentioned here, which consist of running with only 2 points in either the testing set or the training set, with dropout removed. Unfortunately, these runs give a testing accuracy better than the training accuracy, which should not be the case as far as I know. I suspect that I am shaping my data the wrong way. Any hints, suggestions, and advice are appreciated.

My code is the following:

# -*- coding: utf-8 -*-

import keras

import numpy as np

from time import time

from utils import dmanip, vis

from keras.models import Sequential

from keras.layers import LSTM, Dense

from keras.utils import to_categorical

from keras.callbacks import TensorBoard

from sklearn.preprocessing import LabelEncoder

from tensorflow.python.client import device_lib

from sklearn.model_selection import train_test_split

###############################################################################

####################### Extract the data from .csv file #######################

###############################################################################

# get data

data, column_names = dmanip.get_data(file_path='../data_one_outcome.csv')

# split data

X = data.iloc[:, :-1]

# select the target as a 1-D Series (LabelEncoder expects a 1-D array)
y = data.iloc[:, -1].astype('category')

###############################################################################

########################## init global config vars ############################

###############################################################################

# check if GPU is used

print(device_lib.list_local_devices())

# init

n_epochs = 1500

n_comps = X.shape[1]

###############################################################################

################################## Keras RNN ##################################

###############################################################################

# encode the classification labels

le = LabelEncoder()

yy = to_categorical(le.fit_transform(y))

# split the dataset

x_train, x_test, y_train, y_test = train_test_split(X, yy, test_size=0.35,
                                                    random_state=True,
                                                    shuffle=True)

# expand dimensions

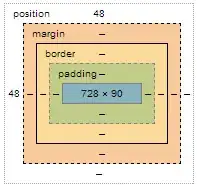

x_train = np.expand_dims(x_train, axis=2)

x_test = np.expand_dims(x_test, axis=2)

# define model

model = Sequential()

model.add(LSTM(units=n_comps, return_sequences=True,
               input_shape=(x_train.shape[1], 1),
               dropout=0.2, recurrent_dropout=0.2))

model.add(LSTM(64, return_sequences=True, dropout=0.2, recurrent_dropout=0.2))

model.add(LSTM(32, dropout=0.2, recurrent_dropout=0.2))

model.add(Dense(4, activation='softmax'))

# print model architecture summary

# summary() prints the table itself and returns None, so no print() is needed
model.summary()

# compile model

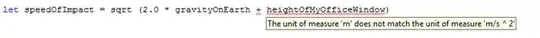

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Create a TensorBoard instance with the path to the logs directory

tensorboard = TensorBoard(log_dir='./logs/rnn/{}'.format(time()))

# fit the model

history = model.fit(x_train, y_train, epochs=n_epochs, batch_size=100,
                    validation_data=(x_test, y_test), callbacks=[tensorboard])

# plot results

vis.plot_nn_stats(history=history, stat_type="accuracy", fname="RNN-accuracy")

vis.plot_nn_stats(history=history, stat_type="loss", fname="RNN-loss")
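One thing that may be worth ruling out: the model above ends in a 4-way softmax but is compiled with `loss='binary_crossentropy'`. As far as I understand, in some Keras versions `metrics=['accuracy']` is then resolved to an element-wise binary accuracy rather than categorical accuracy, which can make the reported numbers look better than the true class accuracy. The NumPy-only sketch below uses hypothetical targets and predictions (not output from the model above) to show how far the two metrics can diverge:

```python
import numpy as np

# Hypothetical one-hot targets for 4 classes (3 samples).
y_true = np.array([[1, 0, 0, 0],
                   [0, 1, 0, 0],
                   [0, 0, 1, 0]], dtype=float)

# Hypothetical softmax outputs; every predicted class (argmax) is wrong.
y_pred = np.array([[0.1, 0.1, 0.1, 0.7],
                   [0.1, 0.1, 0.1, 0.7],
                   [0.1, 0.1, 0.1, 0.7]])

# Element-wise ("binary") accuracy: threshold each output at 0.5 and
# compare it with the one-hot target entry by entry.
binary_acc = np.mean((y_pred > 0.5) == (y_true > 0.5))

# Categorical accuracy: compare the predicted class with the true class.
categorical_acc = np.mean(np.argmax(y_pred, axis=1) == np.argmax(y_true, axis=1))

print(binary_acc)       # 0.5
print(categorical_acc)  # 0.0
```

If this turns out to apply here, `loss='categorical_crossentropy'` is the usual pairing for one-hot targets with a softmax output.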

My data is a large 2D matrix of shape (38607, 150): 38607 samples, each with 149 features, plus a target column with 4 classes.

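| | feat1 | feat2 | ... | feat148 | feat149 | target |
|---|---|---|---|---|---|---|
| 1 | 2.250 | 0.926 | ... | 16.0 | 0.0 | class1 |
| 2 | 2.791 | 1.235 | ... | 1.0 | 0.0 | class2 |
| ... | ... | ... | ... | ... | ... | ... |
| 38406 | 2.873 | 1.262 | ... | 281.0 | 0.0 | class3 |
| 38407 | 3.222 | 1.470 | ... | 467.0 | 1.0 | class4 |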
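To make the shaping question concrete, here is a minimal sketch of what `np.expand_dims(..., axis=2)` does to a matrix laid out like this. It uses a small random stand-in array (`X_demo`, a hypothetical name) rather than the real data, and relies on the Keras convention that LSTM input is `(samples, timesteps, features_per_step)`:

```python
import numpy as np

# Stand-in for the real feature matrix: 5 samples, 149 feature columns.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(5, 149))

# Same call as in the script: append a trailing axis.
X_seq = np.expand_dims(X_demo, axis=2)

# Read as (samples, timesteps, features_per_step), this treats each of the
# 149 columns as one timestep carrying a single feature.
print(X_seq.shape)  # (5, 149, 1)

# Alternative layout for comparison: one timestep carrying all 149 features.
X_single_step = np.expand_dims(X_demo, axis=1)
print(X_single_step.shape)  # (5, 1, 149)
```

With the `axis=2` layout, each LSTM layer steps through 149 timesteps per sample, which may contribute to the long training time. Whether the 149 columns really form an ordered sequence is a property of the data that this sketch cannot decide.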