I have an application where I have to detect the presence of some items in a scene. The items can be rotated and slightly scaled (larger or smaller). I've tried keypoint detectors, but they are neither fast nor accurate enough. So I've decided to first detect edges in both the template and the search area using Canny (or a faster edge detection algorithm), and then match the edges to find the position, orientation, and size of each match.

All this needs to be done in less than a second.

I've tried matchTemplate() and matchShapes(), but the former is not scale- or rotation-invariant, and the latter doesn't work well with the actual images. Rotating the template image to try each orientation is also too time-consuming.

So far I have been able to detect the edges of the template, but I don't know how to match them against the scene.

I've already gone through the following, but wasn't able to get them to work (they either use an old version of OpenCV or don't work with images other than those in the demo):

https://www.codeproject.com/Articles/99457/Edge-Based-Template-Matching

Angle and Scale Invariant template matching using OpenCV

https://answers.opencv.org/question/69738/object-detection-kinect-depth-images/

Can someone please suggest an approach for this, or a code snippet if possible?

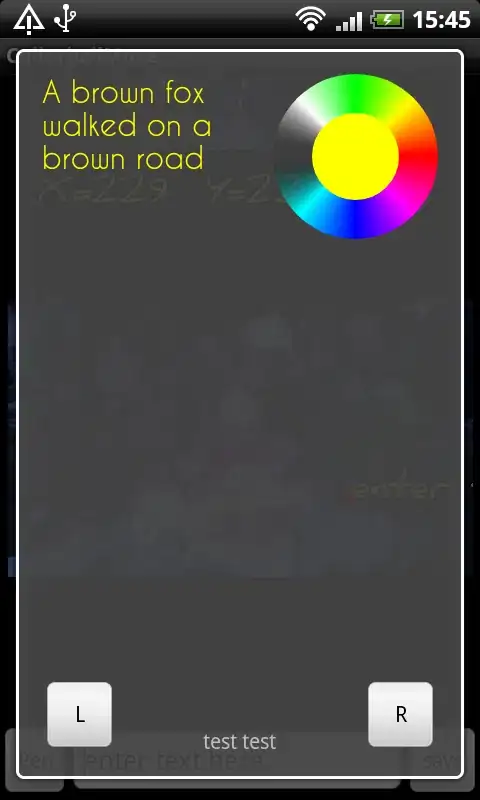

This is my sample input image (the parts to detect are marked in red).

These are some software packages that already do this, and they show how I want it to work: