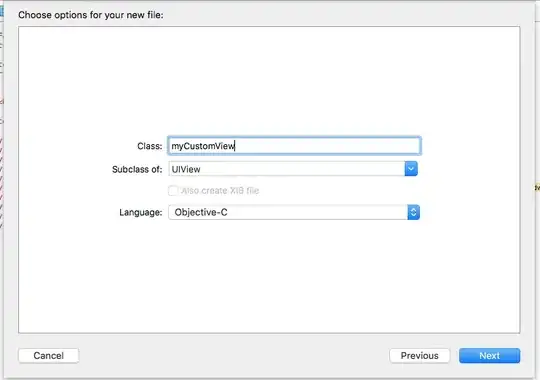

Update: now with an image of one of the more than 6600 target pages: https://europa.eu/youth/volunteering/organisation/48592. See below for the images and for a description of the goals and the data I want.

I am pretty new to data work in the field of volunteering services. Any help is appreciated. I have learned a lot in the past few days from coding heroes such as αԋɱҽԃ αмєяιcαη and KunduK.

Basically, our goal is to create a quick overview of a set of free volunteering opportunities in Europe. I have the list of URLs I want to fetch the data from, and I can already do it for a single URL. I am taking a hands-on approach to dive into Python programming: several parser parts already work, and they are shown further down. Some of the pages in question are listed below. BTW: I guess we should gather the info with pandas and store it in CSV.

- https://europa.eu/youth/volunteering/organisation/50160

- https://europa.eu/youth/volunteering/organisation/50162

- https://europa.eu/youth/volunteering/organisation/50163

...and so forth. Note: not every URL and ID is backed by a content page, so we have to step through the IDs one by one (n+1) and check each of them.
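A minimal sketch of that n+1 stepping, under the assumption that an ID without a content page does not answer with HTTP 200 (this has not been verified against the site). The names `iter_existing_pages`, `make_requests_fetcher` and `URL_TEMPLATE` are made up for this illustration, and the one-second delay is an arbitrary choice.

```python
import time

URL_TEMPLATE = "https://europa.eu/youth/volunteering/organisation/{}"


def iter_existing_pages(ids, fetch, delay=1.0):
    """Yield (org_id, html_text) for every ID whose page answers with HTTP 200.

    `fetch` is any callable that maps a URL to (status_code, html_text).
    """
    for org_id in ids:
        status, html_text = fetch(URL_TEMPLATE.format(org_id))
        if status == 200:  # assumption: missing pages do not return 200
            yield org_id, html_text
        time.sleep(delay)  # pause between requests to be polite to the server


def make_requests_fetcher():
    """Build a `fetch` callable backed by one shared requests.Session."""
    import requests  # imported here so the generator above works without it

    session = requests.Session()

    def fetch(url):
        response = session.get(url, timeout=10)
        return response.status_code, response.text

    return fetch
```

It could be driven with something like `iter_existing_pages(range(48590, 48600), make_requests_fetcher())`, where the ID range is only an example. Passing the fetcher in as an argument keeps the loop easy to try out with a fake function before hitting the real site.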

See examples:

- https://europa.eu/youth/volunteering/organisation/48592

- https://europa.eu/youth/volunteering/organisation/50160

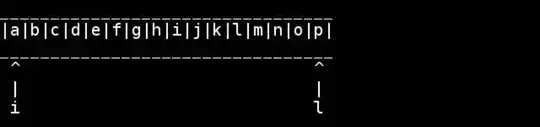

Approach: I used a CSS selector. XPath and CSS selectors do the same job: BeautifulSoup offers CSS selectors through select(), lxml offers XPath through xpath(), and either can be mixed with find() and find_all() (findall() in lxml).

So I run this mini-approach here:

    from bs4 import BeautifulSoup
    import requests

    url = 'https://europa.eu/youth/volunteering/organisation/50160'
    response = requests.get(url)
    soup = BeautifulSoup(response.content, 'lxml')

    # CSS selector for the element that holds the organisation name
    tag_info = soup.select('.col-md-12 > p:nth-child(3) > i:nth-child(1)')
    print(tag_info[0].text)

Output: Norwegian Judo Federation

Mini-approach 2:

    from lxml import html
    import requests

    url = 'https://europa.eu/youth/volunteering/organisation/50160'
    response = requests.get(url)
    tree = html.fromstring(response.content)

    # XPath for the paragraph whose text contains "Norwegian"
    tag_info = tree.xpath("//p[contains(text(),'Norwegian')]")
    print(tag_info[0].text)

Output: Norwegian Judo Federation (NJF) is a center organisation for Norwegian Judo clubs. NJF has 65 member clubs, which have about 4500 active members. 73 % of the members are between ages of 3 and 19. NJF is organized in The Norwegian Olympic and Paralympic Committee and Confederation of Sports (NIF). We are a member organisation in European Judo Union (EJU) and International Judo Federation (IJF). NJF offers and organizes a wide range of educational opportunities to our member clubs.

And so forth. What I am trying to achieve: gather all the interesting information from all of the roughly 6800 pages, such as:

- the URL of the page and all the parts of the page that are marked red

- Name of Organisation

- Address

- DESCRIPTION OF ORGANISATION

- Role

- Expiring date

- Scope

- Last updated

- Organisation Topics (only present on some pages)
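One way to turn a page into a record with these fields is a small table of selectors, sketched below. Only the selector for the organisation name comes from the snippets in this post; the selectors for the other fields would still have to be found by inspecting the page. The names `SELECTORS` and `parse_page` are invented for this sketch, and `html.parser` is used here only to avoid an extra dependency (the snippets in this post use `lxml`).

```python
from bs4 import BeautifulSoup

# Field name -> CSS selector. Only "name" is taken from this post;
# further fields (address, role, scope, ...) still need their own selectors.
SELECTORS = {
    "name": ".col-md-12 > p:nth-child(3) > i:nth-child(1)",
}


def parse_page(url, html_text):
    """Return a dict with the URL and one entry per selector in SELECTORS."""
    soup = BeautifulSoup(html_text, "html.parser")
    record = {"url": url}
    for field, selector in SELECTORS.items():
        node = soup.select_one(selector)  # None when nothing matches
        # Fields such as the topics are not on every page, so store None
        # instead of raising when the element is missing.
        record[field] = node.get_text(strip=True) if node else None
    return record
```

Storing `None` for a missing element keeps one bad page from stopping the whole run, which matters when several thousand pages are processed in one go.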

...and then iterate to the next page, get all the information there, and so forth. So I tried a next step to get some more experience: gathering info from all of the pages. Note: we've got 6926 pages.

The question is how to find out which URL is the first and which is the last. Idea: what if we iterate over the numbers in the URLs, from zero to 10 000?

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    numbers = [48592, 50160]

    def main(url):
        # One session reuses the connection across all requests
        with requests.Session() as req:
            for num in numbers:
                response = req.get(url.format(num))
                soup = BeautifulSoup(response.content, 'lxml')
                tag_info = soup.select('.col-md-12 > p:nth-child(3) > i:nth-child(1)')
                # Not every page contains this element; skip it instead of
                # failing with an IndexError on tag_info[0]
                if tag_info:
                    print(tag_info[0].text)

    main("https://europa.eu/youth/volunteering/organisation/{}/")

But here I run into issues. I guess I have overlooked something while combining the ideas from the parts above. Again, I guess we should gather the info with pandas and store it in CSV.
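The pandas step could look roughly like the sketch below, assuming each page has already been turned into a dict with the same keys. The helper name `records_to_csv` and the two-column layout in `COLUMNS` are placeholders for this illustration; the remaining fields would be added as extra columns.

```python
import pandas as pd

# Hypothetical column set; extend it with address, role, scope and so on.
COLUMNS = ["url", "name"]


def records_to_csv(records, path=None):
    """Write the records to `path`; with path=None, return the CSV text."""
    # Keys missing from a record become empty cells in the CSV.
    frame = pd.DataFrame(records, columns=COLUMNS)
    return frame.to_csv(path, index=False)
```

Calling `records_to_csv(records, "organisations.csv")` would write the file (the file name is just an example), while leaving out the path returns the CSV as a string, which is handy for a quick check.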