I ran into this issue today when using `to_parquet` with `partition_cols`. My workaround was to run:

```python
import pandas as pd
import pyarrow as pa
import pyarrow.parquet as pq

# parse_dates=True only tries to parse the index, so parse the timestamp column explicitly
df = pd.read_csv('box.csv')
df['SETTLEMENTDATE'] = pd.to_datetime(df['SETTLEMENTDATE'])

# derive a plain date column to partition on
df['Date'] = df['SETTLEMENTDATE'].dt.date

# convert to a pyarrow table
df_pa = pa.Table.from_pandas(df)

pq.write_to_dataset(
    df_pa,
    root_path='nem.parquet',
    partition_cols=['Date'],
    basename_template='part-{i}.parquet',
    # wipe each partition directory the first time this write touches it
    existing_data_behavior='delete_matching',
)
```

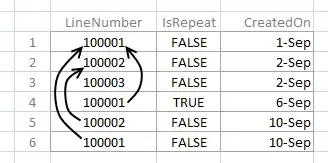

The key argument here is `existing_data_behavior`, which:

> Controls how the dataset will handle data that already exists in the destination. The default behaviour is ‘overwrite_or_ignore’.
>
> ‘overwrite_or_ignore’ will ignore any existing data and will overwrite files with the same name as an output file. Other existing files will be ignored. This behavior, in combination with a unique `basename_template` for each write, will allow for an append workflow.
>
> ‘error’ will raise an error if any data exists in the destination.
>
> ‘delete_matching’ is useful when you are writing a partitioned dataset. The first time each partition directory is encountered the entire directory will be deleted. This allows you to overwrite old partitions completely. This option is only supported for `use_legacy_dataset=False`.

Run using `pyarrow==10.0.1`.