I have a permissions table with 3 column, id, tenantId, and serviceIds, which is text[] array column with ~100 values in average

| id | tenantId | actionServiceIds |

|---|---|---|

| 247584 | 748391 | ["azure","aws,"kfc","facebook"] |

| 247584 | 748384 | ["google","kfc",facebook] |

tenantId and id is my primary key. and there are around ~ 100 different tenants I want to get the distinct count of the service ids for all the ids while filtering on the tenantId column. so for the above example, with no tenant filter I should get

| id | count |

|---|---|

| 247584 | 3 |

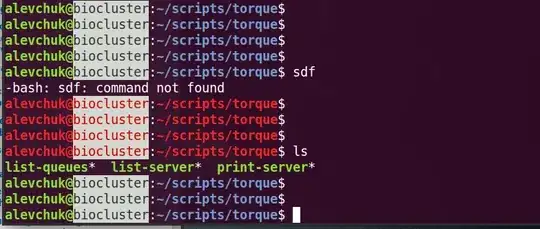

a naïve version will be something like:

select id, count(distinct actionServiceId)

from permissions, unnest(permissions.actionServiceIds) AS

actionServiceId

where tenantId in ('748384')

group by id

the problem is that most of the time, the required tenants contain a large portion of the tenants, so when the table grows to 100k~ records the unnest and the distinct count group by turns extremally slow. I tried to create a different table with the result of the unnest, but still, with poor results, What is the best approach to this problem? is the unnest group by is the way to go? I can't create materialized view because of the different tenantIds combinations, and I need this table to be around 2~3 million rows long.

attached explained output for 800K rows table.

"GroupAggregate (cost=35819.11..149428.28 rows=81335 width=28) (actual time=769.575..103786.215 rows=86002 loops=1)"

" Group Key: permissions5.id"

" -> Nested Loop (cost=35819.11..133225.28 rows=3077930 width=52) (actual time=767.163..15702.908 rows=34721705 loops=1)"

" -> Gather Merge (cost=35819.11..71666.68 rows=307793 width=76) (actual time=767.111..1312.921 rows=308032 loops=1)"

" Workers Planned: 2"

" Workers Launched: 2"

" -> Sort (cost=34819.09..35139.70 rows=128247 width=76) (actual time=673.584..801.961 rows=102677 loops=3)"

" Sort Key: permissions5.id"

" Sort Method: external merge Disk: 8776kB"

" Worker 0: Sort Method: external merge Disk: 8744kB"

" Worker 1: Sort Method: external merge Disk: 8776kB"

" -> Parallel Seq Scan on permissions5 (cost=0.00..18236.75 rows=128247 width=76) (actual time=0.165..165.462 rows=102677 loops=3)"

" Filter: ((tenantid)::text = ANY ('{d697dcc6-313c-4923-abe8-32b919932488d8861516-b846-49ae-9dda-30c1a3589fef,89a7b752-a12c-4a82-a5de-4b897762b387,7ef84b4c-8c08-43aa-8ee8-522ebd69c617,2a87b660-97e1-4229-8b97-a796874830d0,012fce86-f73e-4358-82ce-b040ffb44067}'::text[]))"

" Rows Removed by Filter: 142381"

" -> Function Scan on unnest actionserviceid (cost=0.00..0.10 rows=10 width=32) (actual time=0.031..0.036 rows=113 loops=308032)"

"Planning Time: 0.194 ms"

"Execution Time: 103802.397 ms"