RandomCrop does not seem to add the padding when I create my images. Why is that?

Reproducible script 1

todo with cifar...

Reproducible script 2:

    def check_size_of_mini_imagenet_original_img():
        import random
        import numpy as np
        import torch
        import os

        # Seed everything so the sampled tasks are reproducible.
        seed = 0
        os.environ["PYTHONHASHSEED"] = str(seed)
        torch.manual_seed(seed)
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
        np.random.seed(seed)
        random.seed(seed)

        import learn2learn

        batch_size = 5
        kwargs: dict = dict(name='mini-imagenet', train_ways=2, train_samples=2, test_ways=2, test_samples=2)
        kwargs['data_augmentation'] = 'lee2019'
        benchmark: learn2learn.BenchmarkTasksets = learn2learn.vision.benchmarks.get_tasksets(**kwargs)
        # `splits` was missing from the original snippet; these are the fields of BenchmarkTasksets.
        splits = ['train', 'validation', 'test']
        tasksets = [(split, getattr(benchmark, split)) for split in splits]
        for i, (split, taskset) in enumerate(tasksets):
            print(f'{taskset=}')
            print(f'{taskset.dataset.dataset.transform=}')
            for task_num in range(batch_size):
                X, y = taskset.sample()
                print(f'{X.size()=}')
                assert X.size(2) == 84
                print(f'{y.size()=}')
                print(f'{y=}')
                for img_idx in range(X.size(0)):
                    visualize_pytorch_tensor_img(X[img_idx], show_img_now=True)
                    if img_idx >= 5:  # print 5 images only
                        break
                # visualize_pytorch_batch_of_imgs(X, show_img_now=True)
                print()
                if task_num >= 4:  # so to get a MI image finally (note omniglot does not have padding at train...oops!)
                    break
                break
            break

and

    import torch  # needed at module level for the type annotation below


    def visualize_pytorch_tensor_img(tensor_image: torch.Tensor, show_img_now: bool = False):
        """
        Needed because the channel order differs between PyTorch (CHW) and matplotlib (HWC).
        Given a Tensor representing the image, use .permute() to put the channels as the last dimension:

        ref: https://stackoverflow.com/questions/53623472/how-do-i-display-a-single-image-in-pytorch
        """
        from matplotlib import pyplot as plt

        assert len(tensor_image.size()) == 3, (
            f'Err your tensor is the wrong shape {tensor_image.size()=}; '
            'likely it should have been a single tensor with 3 channels, '
            'i.e. CHW.'
        )
        if tensor_image.size(0) == 3:  # three channels
            plt.imshow(tensor_image.permute(1, 2, 0))
        else:
            plt.imshow(tensor_image)
        if show_img_now:
            plt.tight_layout()
            plt.show()

images here: https://github.com/learnables/learn2learn/issues/376#issuecomment-1319368831

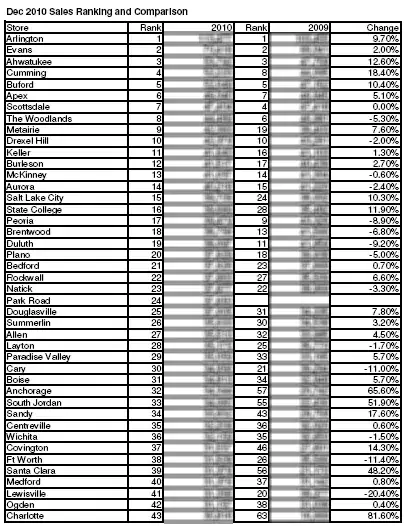

first one:

I am getting odd images, even though the transform printed for the data looks right:

    -- splits[i]='train'
    taskset=<learn2learn.data.task_dataset.TaskDataset object at 0x7fbc38345880>
    taskset.dataset.dataset.datasets[0].dataset.transform=Compose(
        ToPILImage()
        RandomCrop(size=(84, 84), padding=8)
        ColorJitter(brightness=[0.6, 1.4], contrast=[0.6, 1.4], saturation=[0.6, 1.4], hue=None)
        RandomHorizontalFlip(p=0.5)
        ToTensor()
        Normalize(mean=[0.47214064400000005, 0.45330829125490196, 0.4099612805098039], std=[0.2771838538039216, 0.26775040952941176, 0.28449041290196075])
    )

but when I use this instead:

    train_data_transform = Compose([
        RandomResizedCrop((size - padding*2, size - padding*2), scale=scale, ratio=ratio),
        Pad(padding=padding),
        ColorJitter(brightness=0.4, contrast=0.4, saturation=0.4),
        RandomHorizontalFlip(),
        ToTensor(),
        Normalize(mean=mean, std=std),
    ])

it seems to work:

Why don't both versions show the 8 pixels of padding on each side that I expect?

I also looked at the mini-imagenet images produced by torchmeta, and the padding did not seem to be there either:

    task_num=0
    Compose(
        RandomCrop(size=(84, 84), padding=8)
        RandomHorizontalFlip(p=0.5)
        ColorJitter(brightness=[0.6, 1.4], contrast=[0.6, 1.4], saturation=[0.6, 1.4], hue=[-0.2, 0.2])
        ToTensor()
        Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    )
    X.size()=torch.Size([25, 3, 84, 84])

That code is much harder to make compact and reproducible, but you can see torchmeta_plot_images_is_the_padding_there in my ultimate-utils library.

Since two datasets show no padding even though the printed transform says it should be applied, for now I am concluding that there is a bug in PyTorch (or in my PyTorch version), or that I simply don't understand RandomCrop. But the description of its padding argument seems clear to me:

padding (int or sequence, optional) –

Optional padding on each border of the image. Default is None. If a single int is provided this is used to pad all borders.

and the description for the plain Pad(...) transform says something very similar:

padding (int or sequence) –

Padding on each border. If a single int is provided this is used to pad all borders.

So what else could be going wrong? The bottom image I provided, the one with visible padding, was produced with the Pad() transform above, not with RandomCrop.
