I am working on a panography/panorama application in OpenCV and have run into a problem I really can't figure out. For an idea of what a panography looks like, have a look at the Panography Wikipedia article: http://en.wikipedia.org/wiki/Panography

So far, I can take multiple images and stitch them together, using any image I like as the reference image. Here's a little taster of what I mean:

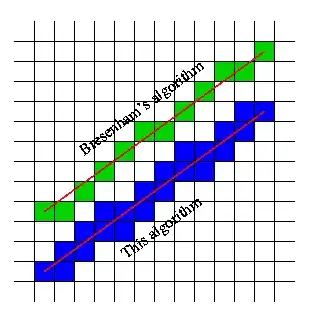

However, as you can see, it has a lot of issues. The main one is that the images are getting cut off (see the far-right image and the tops of the images). To show why this happens, I've drawn the points that have been matched, along with lines showing where the transformed image will end up:

Here the left image is the reference image, and the right image is the image after it has been transformed (original below). The green lines outline the transformed image, which has the following corner points:

- TL: [234.759, -117.696]
- TR: [852.226, -38.9487]
- BR: [764.368, 374.84]
- BL: [176.381, 259.953]
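As background (standard homography math, not something specific to this code): each corner $(x, y)$ of the original image is multiplied by the 3×3 matrix $H$ returned by cv::findHomography and then divided by its third homogeneous component. cv::perspectiveTransform applies exactly this mapping to a list of points.

$$
\begin{pmatrix} u \\ v \\ w \end{pmatrix} = H \begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
(x', y') = \left( \frac{u}{w}, \frac{v}{w} \right)
$$

cv::warpPerspective only computes destination pixels inside its output size, whose coordinates start at $(0, 0)$, so any part of the warped image with $x' < 0$ or $y' < 0$ is dropped. TL and TR above both have $y' < 0$, which is consistent with the tops being cut off.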

So the main problem I have is that after the perspective has been changed the image:

Suffers losses like so:

Now enough images, some code.

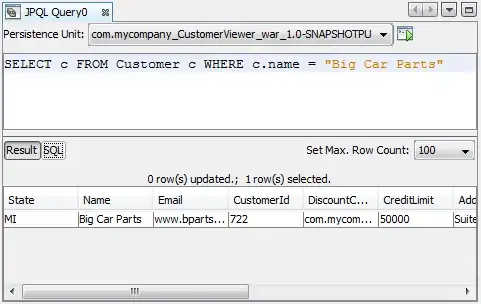

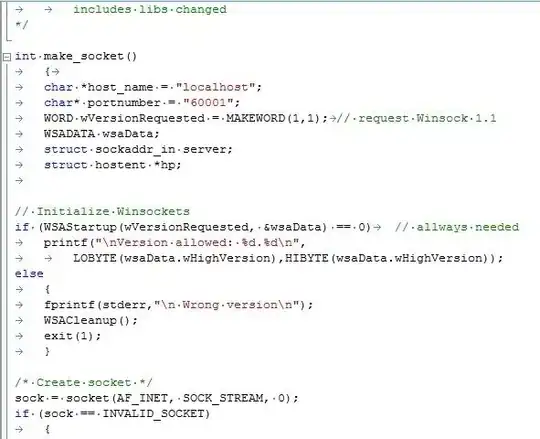

I'm using cv::SurfFeatureDetector, cv::SurfDescriptorExtractor and cv::FlannBasedMatcher to find those points, and I compute the match distances and, more importantly, the good matches as follows:

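```cpp
/* find the smallest and largest match distances (initial values as in the tutorial) */
double min_dist = 100;
double max_dist = 0;

for (int i = 0; i < descriptors_thisImage.rows; i++) {
    double dist = matches[i].distance;
    if (dist < min_dist) min_dist = dist;
    if (dist > max_dist) max_dist = dist;
}

/* keep only the good matches: those closer than 3 * min_dist */
std::vector<cv::DMatch> good_matches;

for (int i = 0; i < descriptors_thisImage.rows; i++) {
    if (matches[i].distance < 3 * min_dist) {
        good_matches.push_back(matches[i]);
    }
}
```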

This is pretty standard, and to do this I followed the tutorial found here: http://opencv.itseez.com/trunk/doc/tutorials/features2d/feature_homography/feature_homography.html

To copy the images on top of one another, I use the following code (where img1 and img2 are std::vector<cv::Point2f>):

```cpp
/* set the keypoints from the good matches */
for (size_t i = 0; i < good_matches.size(); i++) {
    img1.push_back(keypoints_thisImage[good_matches[i].queryIdx].pt);
    img2.push_back(keypoints_referenceImage[good_matches[i].trainIdx].pt);
}

/* calculate the homography */
cv::Mat H = cv::findHomography(cv::Mat(img1), cv::Mat(img2), CV_RANSAC);

/* warp the image */
cv::warpPerspective(thisImage, thisTransformed, H,
                    cv::Size(thisImage.cols * 2, thisImage.rows * 2), cv::INTER_CUBIC);

/* place the contents of thisImage in gsThisImage */
thisImage.copyTo(gsThisImage);

/* set the values of gsThisImage to 255 */
for (int i = 0; i < gsThisImage.rows; i++) {
    cv::Vec3b *p = gsThisImage.ptr<cv::Vec3b>(i);
    for (int j = 0; j < gsThisImage.cols; j++) {
        for (int grb = 0; grb < 3; grb++) {
            p[j][grb] = cv::saturate_cast<uchar>(255.0f);
        }
    }
}

/* convert the colour to greyscale */
cv::cvtColor(gsThisImage, gsThisImage, CV_BGR2GRAY);

/* warp the greyscale image to create an image mask */
cv::warpPerspective(gsThisImage, thisMask, H,
                    cv::Size(thisImage.cols * 2, thisImage.rows * 2), cv::INTER_CUBIC);

/* stitch the transformed image to the reference image */
thisTransformed.copyTo(referenceImage, thisMask);
```

So, I know where the warped image is going to end up, and I have the matched points used to compute the homography matrix for these transformations, but I can't figure out how to translate the images so that they don't get cut off. Any help or pointers are very much appreciated!